Manage a cloud data source

This article describes how to manage a cloud data source.

Supported vendors

The configuration for cloud data sources is nearly the same for every vendor. For vendor-specific configuration details, see the following:

Create a cloud data source

To create a cloud data source, use the following steps:

- Go to Sources > Data Sources.

- Click + Add Data Source.

- Under Categories, click Data Warehouse and select a vendor.

- In the Name field, enter a unique name for the data source related to your use case.

- Click Continue.

Establish a connection

Before you configure the data to import, you must establish a connection to your cloud data source. A connection is the reusable configuration of your vendor credentials that connects Tealium to your cloud data source.

On the Connection Configuration screen, confirm the name of the data source, then select an existing connection from the list or create a connection by clicking the + icon.

Click Save to return to the Connection Configuration screen, then click Establish Connection.

After you successfully establish a connection, select a table from the Table Selection list.

For more information about connecting to your vendor, see:

Enable processing

Turn on Enable Processing to begin importing data immediately after you save and publish your changes. Or, leave this setting off while you complete the configuration and turn it on later.

Configure the query

On the Query Mode and Configuration screen, select the appropriate query mode and, optionally, include a SQL WHERE clause to import only the records that match your custom condition.

Select a query mode

The query mode determines how to select new rows, modified rows, or both for import.

- If you select Timestamp + Incrementing (recommended), you must select two columns: a timestamp column and a strictly incrementing column.

- If you select Timestamp or Incrementing, you must select a column to use to detect either new and modified rows or new rows only.

For more information, see Query modes.
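To make the difference between the modes concrete, the following is a minimal sketch of how an incremental query of each kind could select rows. It runs against an in-memory SQLite table. The table name `orders`, the columns `id` and `updated_at`, and the SQL conditions are assumptions for illustration and do not represent the queries that Tealium issues.

```python
import sqlite3

# Hypothetical table: `id` is strictly incrementing, `updated_at` is the timestamp column.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, updated_at TEXT, status TEXT)")
conn.executemany(
    "INSERT INTO orders VALUES (?, ?, ?)",
    [
        (1, "2026-01-03 08:00:00", "shipped"),  # modified after the last import
        (2, "2026-01-01 10:00:00", "new"),      # last row already imported
        (3, "2026-01-01 10:00:00", "new"),      # new row, same timestamp as row 2
        (4, "2026-01-02 09:00:00", "new"),      # new row, later timestamp
    ],
)

# Illustrative conditions per query mode (assumed, not Tealium's actual SQL).
QUERIES = {
    "incrementing": "SELECT id FROM orders WHERE id > :last_id ORDER BY id",
    "timestamp": (
        "SELECT id FROM orders WHERE updated_at > :last_ts ORDER BY updated_at, id"
    ),
    "timestamp+incrementing": (
        "SELECT id FROM orders "
        "WHERE updated_at > :last_ts "
        "OR (updated_at = :last_ts AND id > :last_id) "
        "ORDER BY updated_at, id"
    ),
}


def select_ids(mode, last_ts="2026-01-01 10:00:00", last_id=2):
    """Return the ids the given mode selects after the last import position."""
    params = {"last_ts": last_ts, "last_id": last_id}
    return [row[0] for row in conn.execute(QUERIES[mode], params)]


print(select_ids("incrementing"))            # [3, 4]
print(select_ids("timestamp"))               # [4, 1]
print(select_ids("timestamp+incrementing"))  # [3, 4, 1]
```

In this sketch, Incrementing misses the modified row 1, and Timestamp misses row 3 because it shares a timestamp with the last imported row. The combined condition picks up both.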

Configure the query

- In the Query > Select Columns section, select the columns to import. To change the table or view, click Previous and select a different table.

- (Optional) To add a custom condition, include a SQL WHERE clause. The WHERE clause does not support subqueries from multiple tables. To import data from multiple tables, create a view and select the view in the data source configuration.

- Click Test Query to validate your SQL query and preview the results.
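Because the WHERE clause cannot contain subqueries from multiple tables, the multi-table logic can live in a view instead. The following is a minimal sketch using SQLite. The tables `customers` and `orders` and the view `customer_orders` are hypothetical, and view syntax and required permissions vary by vendor.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE customers (customer_id INTEGER PRIMARY KEY, email TEXT);
    CREATE TABLE orders (order_id INTEGER PRIMARY KEY, customer_id INTEGER, total REAL);
    INSERT INTO customers VALUES (1, 'a@example.com'), (2, 'b@example.com');
    INSERT INTO orders VALUES (10, 1, 25.0), (11, 2, 250.0), (12, 1, 120.0);

    -- The join lives in the view, so the data source only sees one relation.
    CREATE VIEW customer_orders AS
    SELECT o.order_id, c.email, o.total
    FROM orders AS o
    JOIN customers AS c ON c.customer_id = o.customer_id;
    """
)

# A single-table WHERE condition, applied to the view.
rows = conn.execute(
    "SELECT order_id, email, total FROM customer_orders "
    "WHERE total > 100 ORDER BY order_id"
).fetchall()
print(rows)  # [(11, 'b@example.com', 250.0), (12, 'a@example.com', 120.0)]
```

With a view like this in place, the data source would select `customer_orders` as its table and keep the WHERE clause to a simple condition such as `total > 100`.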

Map columns

Use the column mapping table to map pre-configured column labels to event attributes or manually enter the column labels for mapping. Columns not mapped to an event attribute are ignored.

For each column label, select the corresponding event attribute from the list.
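Conceptually, the mapping behaves like a lookup from column labels to event attributes, with unmapped columns dropped. The following is a minimal sketch of that idea. The column labels, attribute names, and the `map_row` helper are hypothetical and are not part of any Tealium API.

```python
def map_row(row, column_to_attribute):
    """Return the event attributes for one row; unmapped columns are ignored."""
    return {
        attribute: row[column]
        for column, attribute in column_to_attribute.items()
        if column in row
    }


# Hypothetical mapping: column label -> event attribute name.
COLUMN_TO_ATTRIBUTE = {"EMAIL": "customer_email", "ORDER_TOTAL": "order_total"}

row = {"EMAIL": "a@example.com", "ORDER_TOTAL": 120.0, "INTERNAL_NOTE": "not mapped"}
event = map_row(row, COLUMN_TO_ATTRIBUTE)
print(event)  # {'customer_email': 'a@example.com', 'order_total': 120.0}
```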

Map a visitor ID

To use your cloud data source with AudienceStream, map a column to a visitor ID attribute. Select a column that represents a visitor ID and map it to the corresponding visitor ID attribute.

Visitor ID Mapping in AudienceStream is enabled by default. Disabling visitor ID mapping may cause errors in visitor stitching. For more information, see Visitor Identification using Tealium Data Sources.

Summary

In this final step, view the summary, make any needed corrections, and then save and publish your profile. To edit your configuration, click Previous to return to the step where you want to make changes.

- View the event attribute and visitor ID mappings.

- Click Finish to create the data source and exit the configuration screen. The new data source is listed in the Data Sources dashboard.

- Click Save/Publish to save and publish your changes.

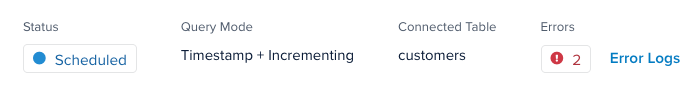

Processed rows and errors

To see import activity, navigate to Data Sources and expand the data source.

Statuses

After you configure a cloud data source, it displays one of the following statuses:

| Status | Description |
|---|---|
| Failed | A connection error has occurred, such as an authentication failure, and imports are halted until the error is resolved. Row-level errors during an import do not trigger this status and are logged while the data source remains in Running. |
| Inactive | The data source was created but was never turned on or transitioned to any other status. |
| Initializing | The connector is starting for the first time or resuming from a Stopped state. This is a temporary state before transitioning to Running or Scheduled. |
| Running | The connector is actively querying and importing data. |
| Scheduled | The next import is scheduled to run. This state can follow Initializing or Running. |
| Stopped | The data source was previously enabled but is now turned off. No data imports occur until it is enabled. |
| Unassigned | The task is awaiting allocation to a cloud worker. |

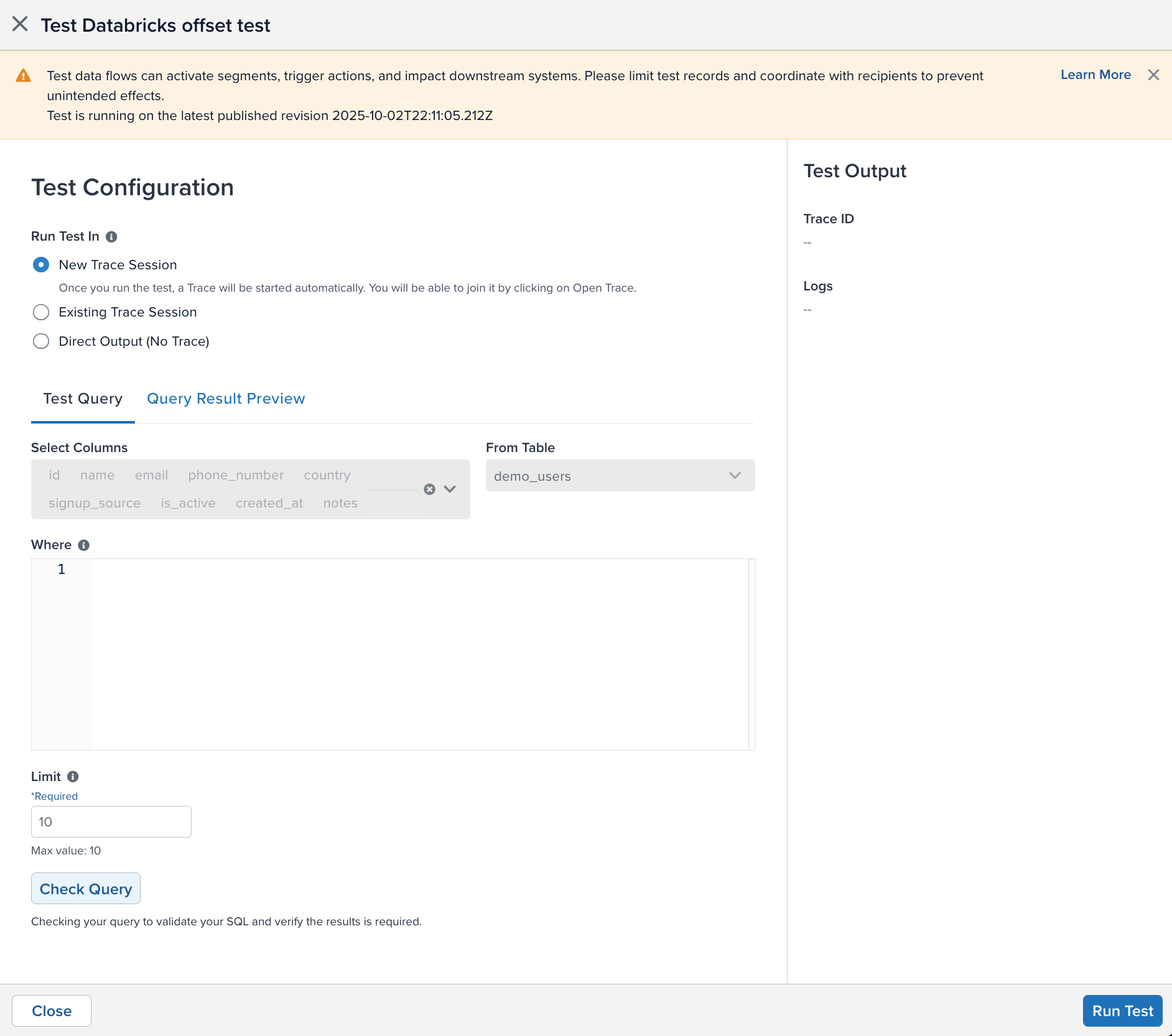

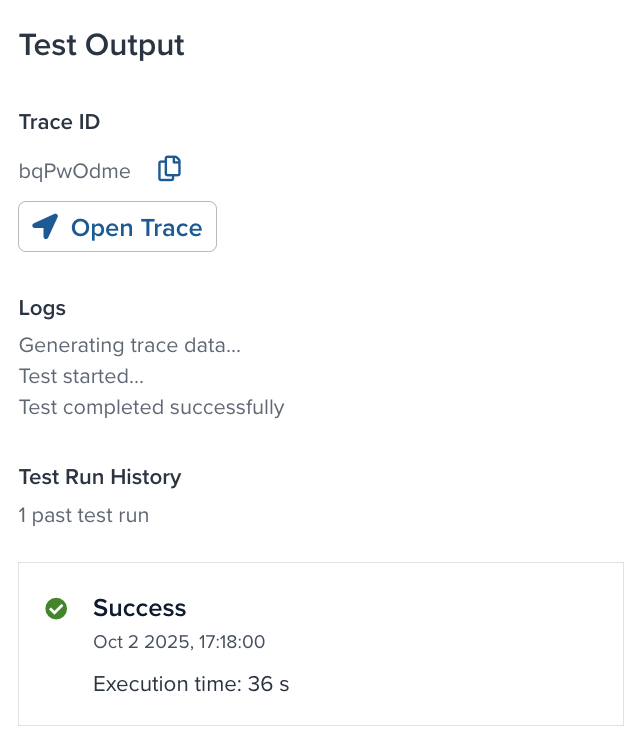

Test configuration

We recommend that you test the data source and query before enabling data sources, segments, or activations. However, running a test may activate segments, trigger enabled actions, or affect downstream systems. To prevent unintended results, disable connectors and functions, limit test records, and coordinate with recipients.

To test your data source configuration, use the following steps:

- Locate your data source in the Data Sources screen and click the edit icon.

- Click the action button in the upper-right corner of the data source details window, and then click End-to-end Testing.

- Select how you want to receive the output:

- New Trace Session: The output is displayed in a new trace session with up to 10 records. This option is best for end-to-end verification and for reviewing log details.

- Existing Trace Session: The output is displayed in a trace session you have already started. Enter the Trace ID and click Join Trace.

- Direct Output: The raw results are displayed on the screen. No trace ID is added to the data records, and no trace is available. This option is best for quickly confirming the query and attribute mapping.

- Select the number of rows to process for the test. The maximum number of rows is 10.

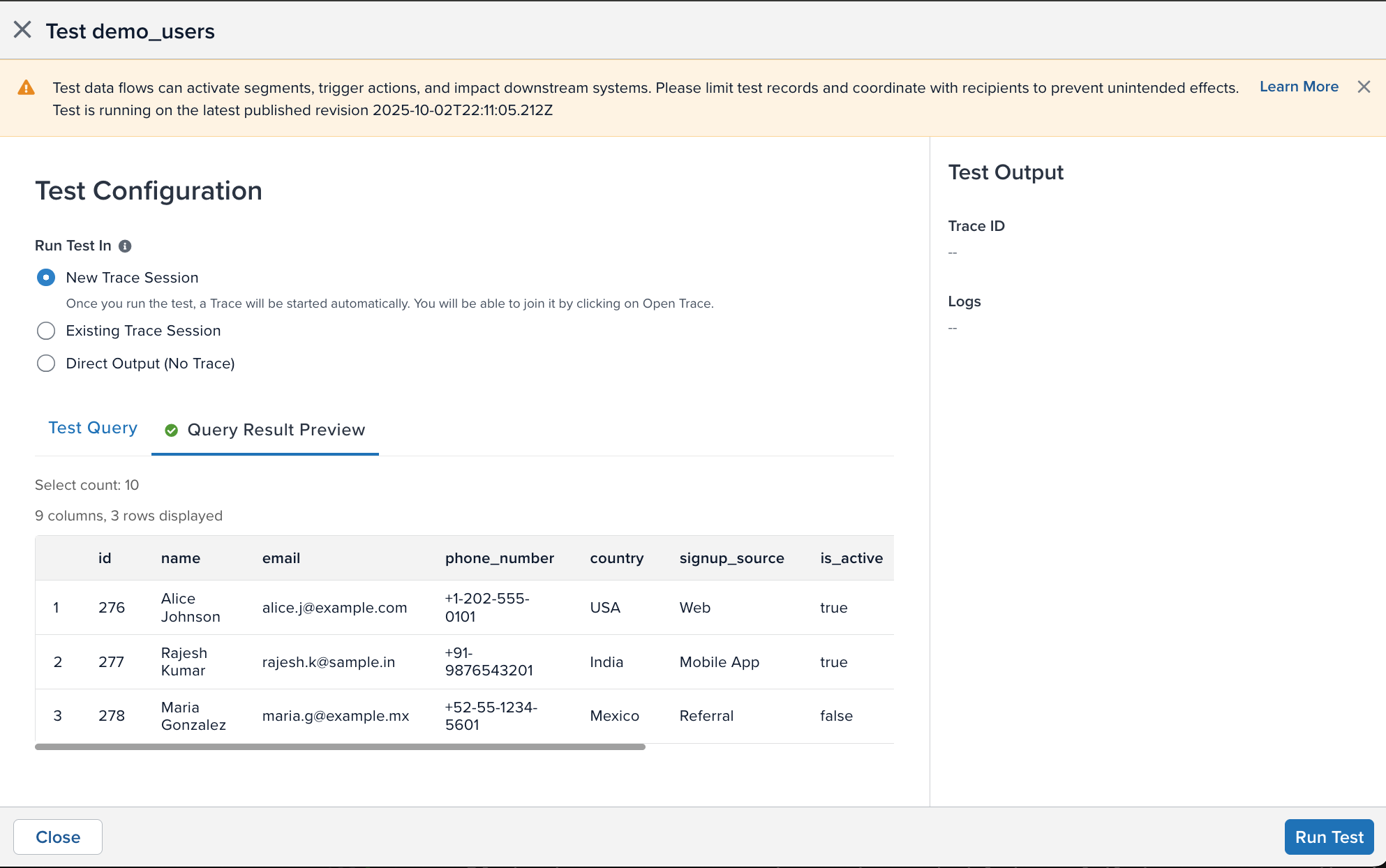

- Under Test Query, select the columns from the table to include in the test. Click the X in a column to remove it from the list. You must select at least one column.

- Under From Table, select the table you want to query. The Select Columns box will update with the table’s columns.

- Under Where, enter the SQL query to perform on the table.

- Click Check Query to validate the SQL and verify that required fields are set.

- The results will appear in a table under the Query Result Preview tab:

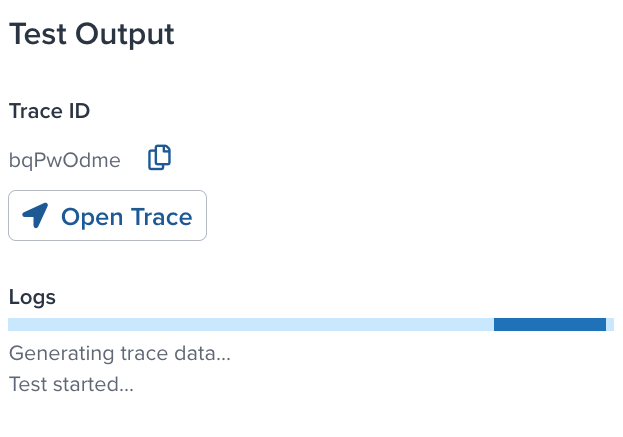

- Click Start Test to start the configuration test.

The right sidebar displays a progress bar that estimates the time remaining, along with status messages that report any errors the test encounters.

When the test is complete, the results are displayed.

- If you want to watch the test run in a trace, click Join Trace.

- If the test fails, you can do the following:

- Click Edit Test Configuration to change the configuration settings.

- Click Retry Test to run the current configuration again.

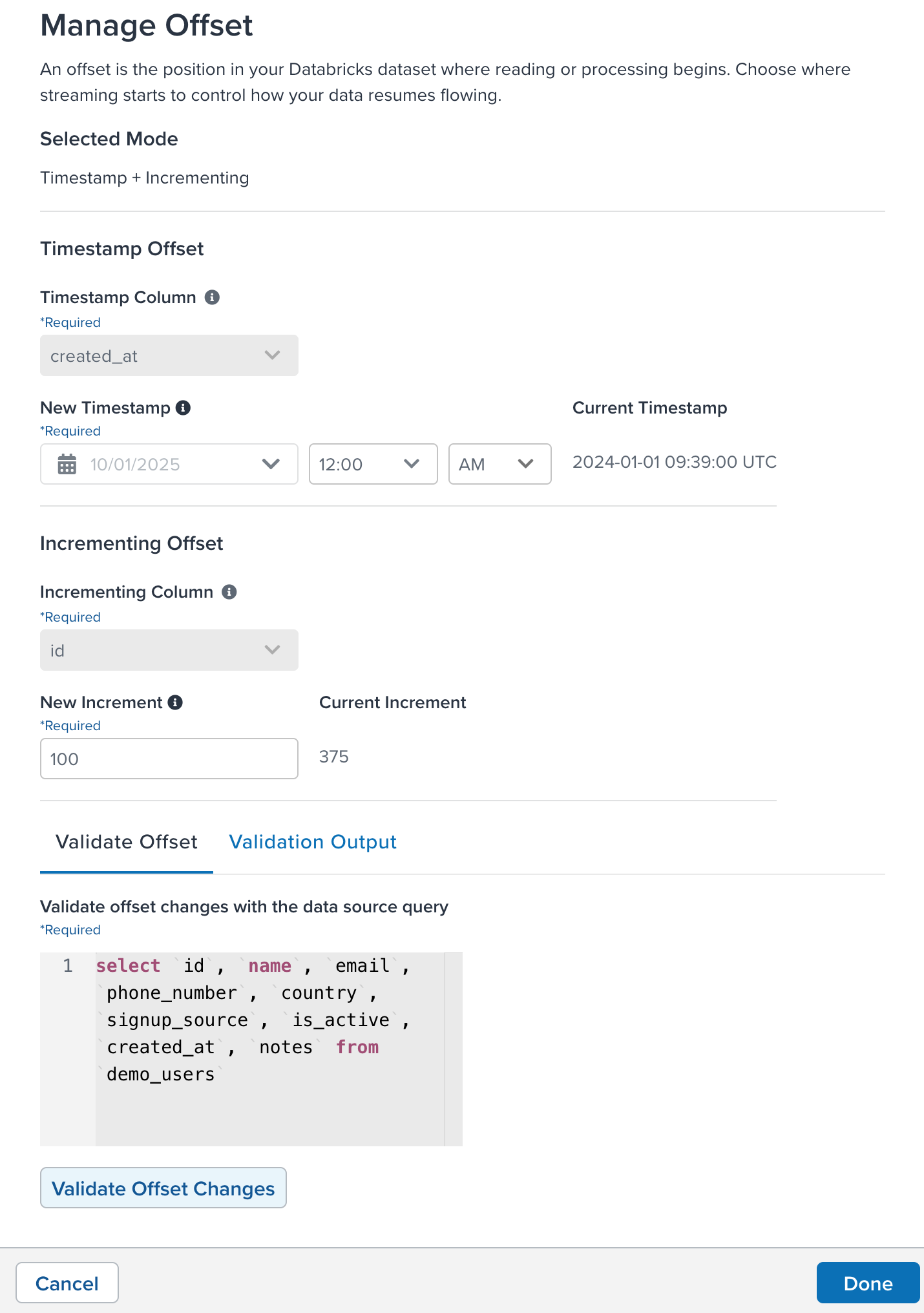

Set processing start

Data sources track the date or incrementing value position where querying begins in your cloud data view or table. You can reset or manually set that position to control where in the view or table to start querying.

For example, suppose a recent mailing list activation contained an error and processed 100 records, and now the current incrementing position is 342. To reprocess those records after correcting the email activation, set the start point to 242. When you restart the data source, it queries records starting from that position and sends the corrected email.
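The arithmetic in this example can be sketched as a small helper. It assumes that the incrementing column increases by exactly 1 per row with no gaps, which may not hold for your table. The function name `rewind_start_point` is hypothetical.

```python
def rewind_start_point(current_position: int, records_to_reprocess: int) -> int:
    """Return the start point that replays the last N records.

    Assumes the incrementing column grows by exactly 1 per row, without gaps.
    """
    if records_to_reprocess < 0:
        raise ValueError("records_to_reprocess must not be negative")
    new_start = current_position - records_to_reprocess
    if new_start < 1:
        # The start point must be a positive integer.
        raise ValueError("resulting start point must be a positive integer")
    return new_start


print(rewind_start_point(342, 100))  # 242
```

If the column has gaps, look up the incrementing value of the first record to reprocess in the table rather than subtracting a count.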

You can only manage the start point if the following conditions are true:

- The current profile is published.

- The query mode is Timestamp + Incrementing (Recommended), Timestamp, or Incrementing. You cannot manage the start point for Full Resync query mode.

- The data source is stopped.

- If the data source status is running, scheduled, or failed, you cannot edit the start point. Only information about the current start point is available.

- If the status is initializing, inactive, or there is a connection error, the start point is not available.
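The conditions above can be summarized as a small decision function. This is only a sketch of the rules as stated in this article: the function name, the return labels, and the lowercase mode names are hypothetical. Statuses that the rules do not mention, such as Unassigned, are reported as unknown, and the connection error case is not modeled separately.

```python
# Status groups taken from the conditions listed above.
READ_ONLY_STATUSES = {"running", "scheduled", "failed"}
UNAVAILABLE_STATUSES = {"initializing", "inactive"}
# Hypothetical identifiers for the query modes that support a start point.
INCREMENTAL_MODES = {"timestamp+incrementing", "timestamp", "incrementing"}


def start_point_access(status: str, query_mode: str, profile_published: bool) -> str:
    """Classify what can be done with the start point for a data source."""
    status = status.lower()
    if status in UNAVAILABLE_STATUSES:
        return "unavailable"
    if status in READ_ONLY_STATUSES:
        return "read_only"
    if status != "stopped":
        return "unknown"  # not covered by the rules in this article
    if profile_published and query_mode in INCREMENTAL_MODES:
        return "editable"
    return "not_manageable"


print(start_point_access("Stopped", "timestamp", True))    # editable
print(start_point_access("Running", "timestamp", True))    # read_only
print(start_point_access("Stopped", "full resync", True))  # not_manageable
```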

To manage the start point for the data source, use the following steps:

- Locate your data source in the Data Sources screen and click the edit icon.

- Click the action button in the upper-right corner of the data source details window and then click Set Processing Start.

- The start point methods available are Processing Start Timestamp and Incrementing Start Point. Your query mode determines which start points are available.

- Under Timestamp Column, select the column in the table that represents the timestamp.

- Under New Timestamp, select a date and time to use as a starting position when importing the data.

- The new timestamp must be in the past. Future dates and times are not allowed.

- The current timestamp field displays the currently used starting position.

- Under Incrementing column, select the column that represents the incrementing value for each row added to the table.

- Under New Increment, enter a number to use to set the start point when importing the data.

- The new start point must be a positive integer.

- The current start point field displays the starting position currently in use.
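If you compute start values in a script before entering them, the two constraints above (a timestamp in the past and a positive integer increment) can be checked first. The following is a minimal sketch with hypothetical helper names. It assumes timezone-aware datetimes, and it does not reproduce the validation that the interface performs.

```python
from datetime import datetime, timezone


def is_valid_new_timestamp(new_timestamp, now=None):
    """Return True if the timestamp is strictly in the past.

    Both values must be timezone-aware datetimes.
    """
    now = now or datetime.now(timezone.utc)
    return new_timestamp < now


def is_valid_new_increment(value):
    """Return True if the value is a positive integer (booleans are rejected)."""
    return isinstance(value, int) and not isinstance(value, bool) and value > 0


reference = datetime(2026, 2, 6, tzinfo=timezone.utc)
print(is_valid_new_timestamp(datetime(2026, 1, 1, tzinfo=timezone.utc), now=reference))  # True
print(is_valid_new_timestamp(datetime(2026, 3, 1, tzinfo=timezone.utc), now=reference))  # False
print(is_valid_new_increment(242))  # True
print(is_valid_new_increment(0))    # False
```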

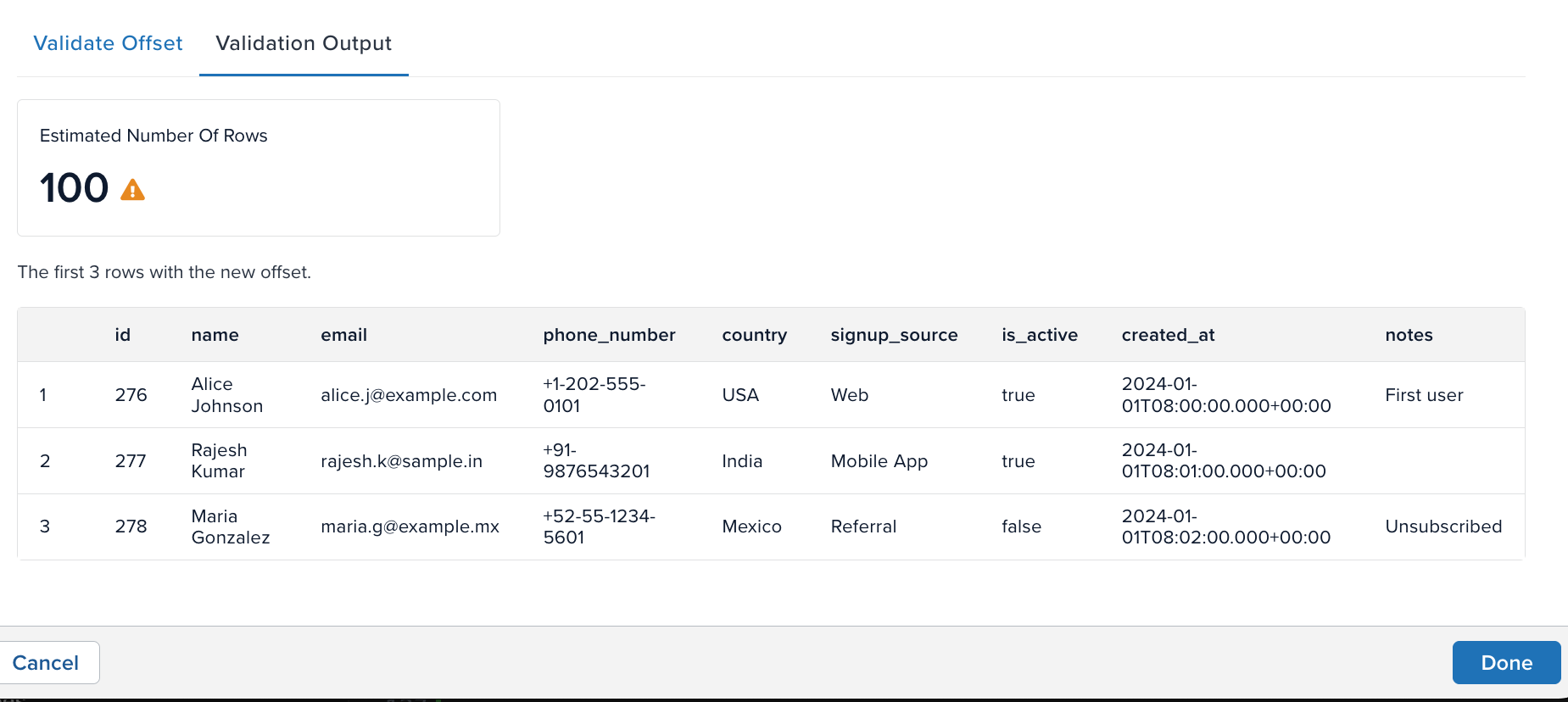

Click Validate Processing Start Changes to preview the data that will be imported from the new start point. The table shows sample rows. It also provides an estimate of how many rows will be processed after you adjust the start point.

Click Done to confirm the new start point settings. Click Cancel to discard your changes. Restart the data source after changing the start point.

This page was last updated: February 6, 2026